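A Complete Record of Installing Kubeflow in China, Pitfalls Included

Environment preparation

Kubeflow's environment requirements are high. The official requirements: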

at least one worker node with a minimum of:

- 4 CPU

- 50 GB storage

- 12 GB memory

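Of course, you can still install it on less, but you will run into resource problems later on, because this is a full-bundle install.

You need an existing Kubernetes cluster. I used a cluster set up with Rancher:

sudo docker run -d --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher

I chose Kubernetes 1.14 here. See the official website for Kubeflow and Kubernetes version compatibility. My Kubeflow version is 0.6.

If you just want to install right away, skip ahead to the one-click installation section.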
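As a rough way to check a node against the minimums quoted above, here is a minimal sketch. It assumes you feed it the status.allocatable map of a node (for example from kubectl get nodes -o json), that "GB" means 10^9 bytes, and that the node's ephemeral-storage is an acceptable stand-in for the "50 GB storage" figure. The names MINIMUM, parse_quantity and shortfalls are made up for this illustration.

```python
# Suffixes used by Kubernetes resource quantities (two-letter ones are checked first).
_SUFFIXES = [
    ("Ki", 2**10), ("Mi", 2**20), ("Gi", 2**30), ("Ti", 2**40),
    ("k", 10**3), ("M", 10**6), ("G", 10**9), ("T", 10**12),
    ("m", 1e-3),  # milli-units, e.g. "3900m" CPU
]

# Assumption: the official "4 CPU / 12 GB memory / 50 GB storage" read as decimal GB.
MINIMUM = {"cpu": 4, "memory": 12 * 10**9, "ephemeral-storage": 50 * 10**9}


def parse_quantity(quantity: str) -> float:
    """Convert a quantity string such as "16Gi", "3900m" or "4" to a number."""
    for suffix, factor in _SUFFIXES:
        if quantity.endswith(suffix):
            return float(quantity[: -len(suffix)]) * factor
    return float(quantity)


def shortfalls(allocatable: dict) -> list:
    """Return the resource names for which the node is below the minimum."""
    return [
        name
        for name, needed in MINIMUM.items()
        if parse_quantity(allocatable.get(name, "0")) < needed
    ]
```

An empty list from shortfalls means the node meets all three minimums under these assumptions.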

kustomize

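Downloading the kustomize files

The official tutorial installs with kfctl, which under the hood uses kustomize. So here I download the kustomize files directly and install them after swapping out the images.

Official kustomize files:

git clone https://github.com/kubeflow/manifests

There are a lot of files. You can export them one by one with a script, or generate them with the kfctl command kfctl generate all -V, which yields:

kustomize/

- ambassador: microservice gateway

- argo: task workflow orchestration

- centraldashboard: Kubeflow's dashboard page

- tf-job-operator: deep learning framework engine, a CRD built on TensorFlow whose resource kind is TFJob

- katib: hyperparameter server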

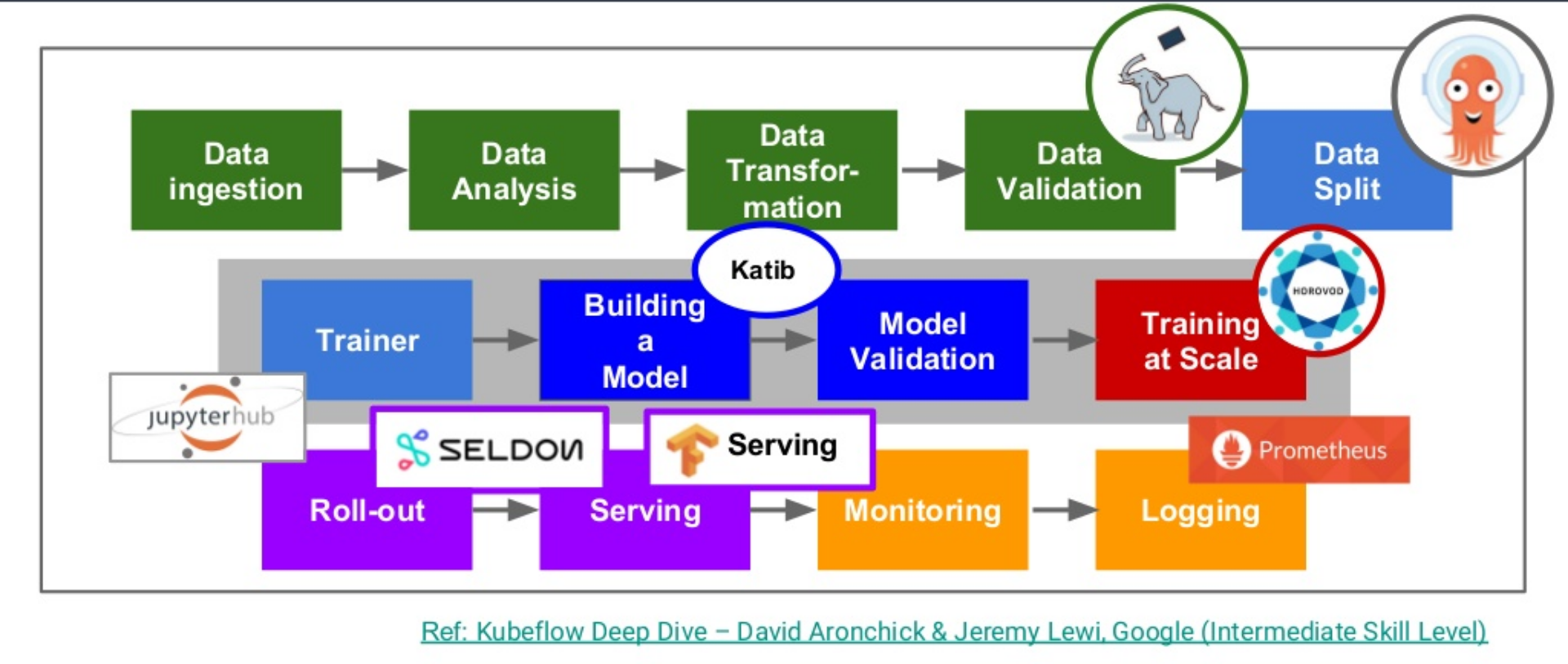

Workflow of the machine learning toolkit

Modifying the kustomize files

Replacing the images in the kustomize files

Replace the images:

grc_image = [

"gcr.io/kubeflow-images-public/ingress-setup:latest",

"gcr.io/kubeflow-images-public/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c",

"gcr.io/kubeflow-images-public/kubernetes-sigs/application:1.0-beta",

"gcr.io/kubeflow-images-public/centraldashboard:v20190823-v0.6.0-rc.0-69-gcb7dab59",

"gcr.io/kubeflow-images-public/jupyter-web-app:9419d4d",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-controller:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-manager:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-manager-rest:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-bayesianoptimization:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-grid:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-hyperband:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-nasrl:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/suggestion-random:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/katib/v1alpha2/katib-ui:v0.6.0-rc.0",

"gcr.io/kubeflow-images-public/metadata:v0.1.8",

"gcr.io/kubeflow-images-public/metadata-frontend:v0.1.8",

"gcr.io/ml-pipeline/api-server:0.1.23",

"gcr.io/ml-pipeline/persistenceagent:0.1.23",

"gcr.io/ml-pipeline/scheduledworkflow:0.1.23",

"gcr.io/ml-pipeline/frontend:0.1.23",

"gcr.io/ml-pipeline/viewer-crd-controller:0.1.23",

"gcr.io/kubeflow-images-public/notebook-controller:v20190603-v0-175-geeca4530-e3b0c4",

"gcr.io/kubeflow-images-public/profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e",

"gcr.io/kubeflow-images-public/kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4",

"gcr.io/kubeflow-images-public/pytorch-operator:v1.0.0-rc.0",

"gcr.io/google_containers/spartakus-amd64:v1.1.0",

"gcr.io/kubeflow-images-public/tf_operator:v0.6.0.rc0",

"gcr.io/arrikto/kubeflow/oidc-authservice:v0.2"

]

doc_image = [

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.ingress-setup:latest",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.kubernetes-sigs.application:1.0-beta",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.centraldashboard:v20190823-v0.6.0-rc.0-69-gcb7dab59",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.jupyter-web-app:9419d4d",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-controller:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-manager:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-manager-rest:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-bayesianoptimization:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-grid:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-hyperband:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-nasrl:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.suggestion-random:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.katib.v1alpha2.katib-ui:v0.6.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.metadata:v0.1.8",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.metadata-frontend:v0.1.8",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.api-server:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.persistenceagent:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.scheduledworkflow:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.frontend:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/ml-pipeline.viewer-crd-controller:0.1.23",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.notebook-controller:v20190603-v0-175-geeca4530-e3b0c4",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.pytorch-operator:v1.0.0-rc.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/google_containers.spartakus-amd64:v1.1.0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/kubeflow-images-public.tf_operator:v0.6.0.rc0",

"registry.cn-shenzhen.aliyuncs.com/shikanon/arrikto.kubeflow.oidc-authservice:v0.2"

]
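The two lists above follow one naming pattern: drop the gcr.io/ prefix, turn the remaining slashes in the repository path into dots, and prepend the Aliyun namespace. A minimal sketch of applying that mapping to the downloaded manifests could look like the following. It uses plain string substitution on .yaml/.yml files rather than kustomize's own image handling, and it replaces the repository part only, on the assumption that tags are kept in separate fields in some files. The function names and MIRROR_PREFIX are invented for this illustration.

```python
import pathlib
from typing import Iterable

MIRROR_PREFIX = "registry.cn-shenzhen.aliyuncs.com/shikanon/"


def mirror_name(image: str) -> str:
    """Map a gcr.io image (with or without tag) to its mirror name."""
    registry, _, path = image.partition("/")
    if registry != "gcr.io" or not path:
        raise ValueError(f"not a gcr.io image: {image}")
    # Slashes in the repository path become dots; the tag is left untouched.
    repo, sep, tag = path.partition(":")
    return MIRROR_PREFIX + repo.replace("/", ".") + sep + tag


def rewrite_manifests(root: str, images: Iterable[str]) -> int:
    """Rewrite image repositories in YAML files under root; return files changed."""
    repos = {image.partition(":")[0] for image in images}
    # Longest first, so katib-manager-rest is handled before katib-manager.
    ordered = sorted(repos, key=len, reverse=True)
    changed = 0
    for path in pathlib.Path(root).rglob("*"):
        if not path.is_file() or path.suffix not in {".yaml", ".yml"}:
            continue
        text = path.read_text(encoding="utf-8")
        new_text = text
        for repo in ordered:
            new_text = new_text.replace(repo, mirror_name(repo))
        if new_text != text:
            path.write_text(new_text, encoding="utf-8")
            changed += 1
    return changed
```

Calling rewrite_manifests("manifests", grc_image) on the cloned directory would then rewrite the files in place, so it is worth running it on a copy or a clean git checkout first.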

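Modifying the PVCs to use dynamic storage

Change the PVC storage so that PVs are allocated dynamically by local-path-provisioner.

Install local-path-provisioner:

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

To use it directly in Kubeflow, you also need to make the StorageClass the default:

...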

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
  annotations:
    storageclass.beta.kubernetes.io/is-default-class: "true"
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete

...

Once that is done, try creating a PVC:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-path-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

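Note: if local-path is not set as the default StorageClass, add storageClassName: local-path to the PVC so that it binds.

One-click installation

I put together a one-click project that installs Kubeflow from images mirrored inside China: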
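To make the note concrete, here is a small sketch that builds the same test PVC as a Python dict and only sets storageClassName when a class is passed in. The helper name make_pvc is made up for this illustration. The output is JSON, which kubectl apply -f also accepts.

```python
import json


def make_pvc(name, size, namespace="default", storage_class=None):
    """Build a PersistentVolumeClaim manifest as a plain dict."""
    spec = {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    # Only needed when local-path is NOT the default StorageClass.
    if storage_class is not None:
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


if __name__ == "__main__":
    pvc = make_pvc("local-path-pvc", "2Gi", storage_class="local-path")
    print(json.dumps(pvc, indent=2))
```

Saving the printed JSON to a file and applying it with kubectl apply -f should behave the same as the YAML version with the extra storageClassName field.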

https://github.com/shikanon/kubeflow-manifests

Pitfalls encountered along the way

CoreDNS CrashLoopBackOff

Logs:

kubectl -n kube-system logs coredns-6998d84bf5-r4dbk

E1028 06:36:35.489403 1 reflector.go:134] github.com/coredns/coredns/plugin/kubernetes/controller.go:322: Failed to list *v1.Namespace: Get https://10.96.0.1:443/api/v1/namespaces?limit=500&resourceVersion=0: dial tcp 10.96.0.1:443: connect: no route to host

E1028 06:36:35.489403 1 reflector.go:134] github.com/coredns/coredns/plugin/kubernetes/controller.go:322: Failed to list *v1.Namespace: Get https://10.96.0.1:443/api/v1/namespaces?limit=500&resourceVersion=0: dial tcp 10.96.0.1:443: connect: no route to host

log: exiting because of error: log: cannot create log: open /tmp/coredns.coredns-8686dcc4fd-7fwcz.unknownuser.log.ERROR.20191028-063635.1: no such file or directory

This is caused by corrupted or stale firewall (iptables) rules. The fix:

iptables --flush

iptables -t nat --flush

Note that this discards all existing firewall rules.